LLM Chat Windows

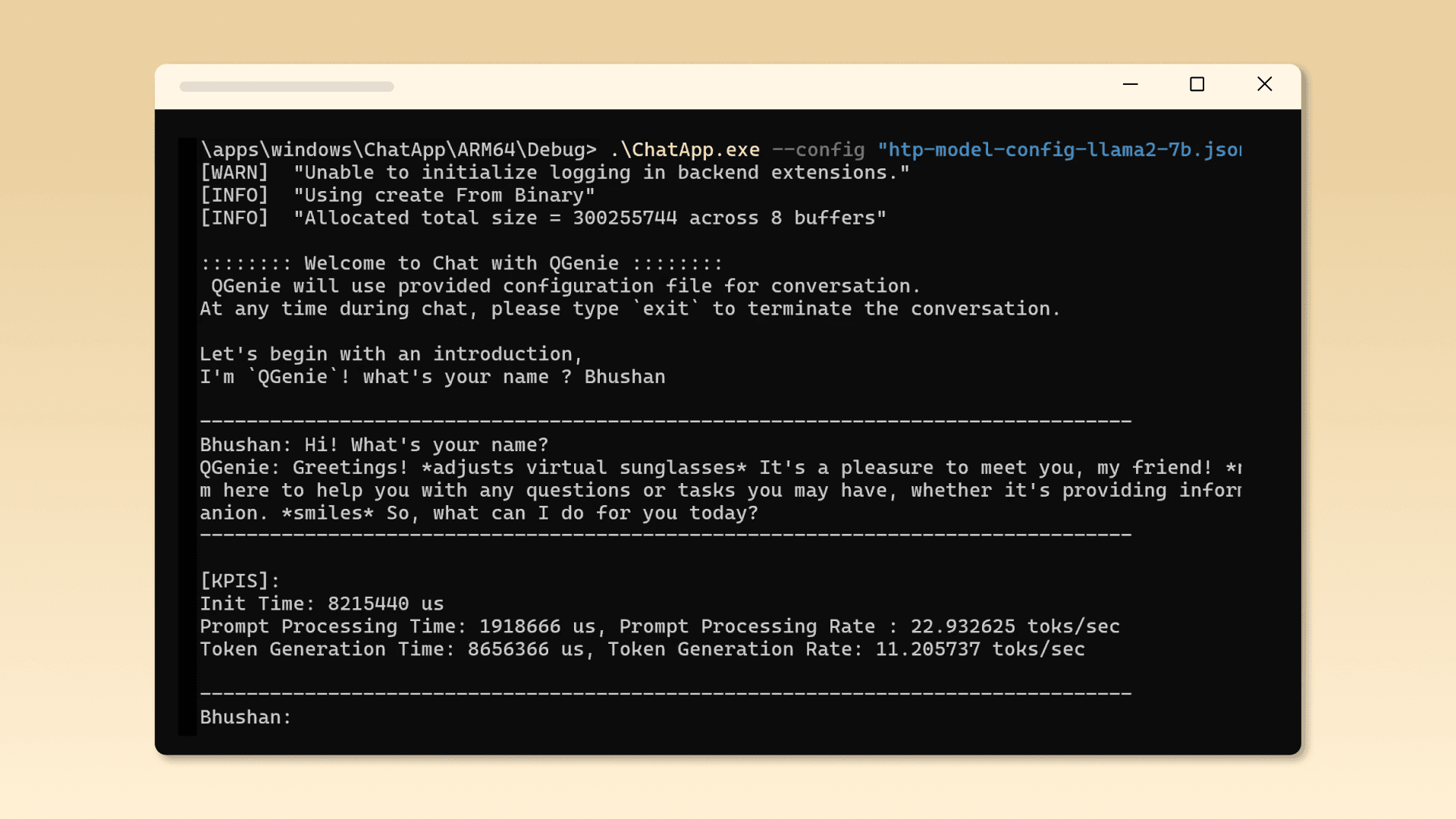

Deploy and run LLMs via CLI

A sample CLI app demonstrating how to integrate an on‑device LLM with Qualcomm® Gen AI Inference Extensions (Genie) APIs, optimized for Snapdragon® NPU acceleration.
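The core of a CLI chat app like this is a loop that reads a prompt, hands it to the on-device model, and prints the reply as it streams back. The following is a minimal sketch of that loop, assuming a streaming, callback-based backend. Every name here (`LlmEngine`, `EchoEngine`, `run_chat`, `TokenCallback`) is hypothetical and is not part of the Genie API. `EchoEngine` is a stub that echoes the prompt word by word, so the loop can be exercised without an NPU or model files.

```cpp
#include <functional>
#include <iostream>
#include <sstream>
#include <string>

// Called once per generated text fragment (streaming output).
// HYPOTHETICAL: stands in for the response callback a real runtime such as
// Genie would invoke; this is not the Genie API.
using TokenCallback = std::function<void(const std::string& fragment)>;

// Minimal backend interface (hypothetical) that keeps the chat loop
// independent of any particular inference runtime.
class LlmEngine {
public:
    virtual ~LlmEngine() = default;
    // Generates a reply to `prompt`, streaming fragments through `on_token`.
    // Returns false if generation failed.
    virtual bool query(const std::string& prompt, const TokenCallback& on_token) = 0;
};

// Stub backend for testing the loop: echoes the prompt back one word at a
// time to mimic token streaming.
class EchoEngine : public LlmEngine {
public:
    bool query(const std::string& prompt, const TokenCallback& on_token) override {
        std::istringstream words(prompt);
        std::string word;
        bool first = true;
        while (words >> word) {
            on_token(first ? word : " " + word);
            first = false;
        }
        return true;
    }
};

// Reads prompts line by line until EOF or "/exit", streams each reply to
// `out`, and returns the number of completed turns.
int run_chat(std::istream& in, std::ostream& out, LlmEngine& engine) {
    int turns = 0;
    std::string line;
    while (true) {
        out << "User: " << std::flush;
        if (!std::getline(in, line) || line == "/exit") break;
        if (line.empty()) continue;  // skip blank prompts
        out << "Assistant: ";
        bool ok = engine.query(line, [&out](const std::string& fragment) {
            out << fragment << std::flush;  // print fragments as they arrive
        });
        out << '\n';
        if (!ok) {
            out << "[generation failed]\n";
            continue;
        }
        ++turns;
    }
    return turns;
}
```

In an actual app, `main` would construct a Genie-backed subclass of `LlmEngine` and call `run_chat(std::cin, std::cout, engine)`. Check the Genie SDK headers for the real dialog-creation and query functions rather than relying on the names used here. Separating the loop from the backend keeps the console handling testable without hardware.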

1. Choose a compatible model

2. Get the source code and dependencies

3. Build and run the app

Compatible Models

App Information

Operating System: Windows 11+
Language: C++
Runtime: Qualcomm® Gen AI Inference Extensions (Genie)
Use Case: Text Generation

Join us on Slack

Join our growing community of 2000+ developers in the AI Hub Slack channel building the future of AI.