OpenAI-Clip

Multi-modal foundation model for vision-and-language tasks such as image-text similarity and zero-shot image classification.

Contrastive Language-Image Pre-Training (CLIP) uses a ViT-like transformer to extract visual features and a causal language model to extract text features. Both encoders project their features into a shared embedding space, so text and image features can be compared directly and used for a variety of zero-shot learning tasks.
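As an illustration of how the shared embedding space enables zero-shot classification, the sketch below scores one image embedding against one text embedding per candidate label (for example, prompts like "a photo of a cat"). It takes cosine similarities, scales them, and applies a softmax. It assumes the embeddings have already been produced by the two encoders. The toy 3-dimensional vectors, the function names, and the `logit_scale` value of 100.0 are hypothetical placeholders, not outputs or parameters of this specific model.

```python
import math

def normalize(v):
    """Scale a vector to unit L2 norm so dot products become cosine similarities."""
    norm = math.sqrt(sum(x * x for x in v))
    if norm == 0:
        raise ValueError("cannot normalize a zero vector")
    return [x / norm for x in v]

def zero_shot_probs(image_emb, text_embs, logit_scale=100.0):
    """Return one probability per candidate label.

    image_emb:   a single image embedding (list of floats).
    text_embs:   one text embedding per label prompt.
    logit_scale: temperature multiplier (100.0 is an assumed value).
    """
    img = normalize(image_emb)
    # Cosine similarity between the image and each label prompt, scaled.
    logits = [logit_scale * sum(a * b for a, b in zip(img, normalize(t)))
              for t in text_embs]
    # Numerically stable softmax over the candidate labels.
    m = max(logits)
    exps = [math.exp(l - m) for l in logits]
    total = sum(exps)
    return [e / total for e in exps]

# Hypothetical toy embeddings standing in for real encoder outputs.
labels = ["cat", "dog", "car"]
image_emb = [0.9, 0.1, 0.0]
text_embs = [[1.0, 0.0, 0.0], [0.0, 1.0, 0.0], [0.0, 0.0, 1.0]]

probs = zero_shot_probs(image_emb, text_embs)
best = labels[probs.index(max(probs))]
print(best, [round(p, 4) for p in probs])
```

Because the label set is just a list of text prompts, new classes can be added at inference time without retraining. Only their text embeddings need to be computed.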

Technical Details

Model checkpoint: ViT-B/16

Image input resolution: 224x224 pixels

Text context length: 77 tokens

Number of parameters: 150M

Model size (float): 571 MB
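The figures above can be cross-checked with a little arithmetic. This sketch assumes three things: the "/16" in ViT-B/16 is the patch size in pixels, one class token is prepended to the patch sequence (standard ViT design), and "float" means 4-byte float32 weights. It uses the rounded 150M parameter count, so the result is approximate.

```python
# Worked arithmetic for the Technical Details (assumptions noted above):
# patch size 16 px, one prepended class token, float32 (4-byte) weights.

IMAGE_SIZE = 224       # input resolution, pixels per side
PATCH_SIZE = 16        # assumed from the "/16" in the checkpoint name
PARAMS = 150_000_000   # rounded parameter count from this card
BYTES_PER_PARAM = 4    # assumed float32

patches_per_side = IMAGE_SIZE // PATCH_SIZE   # 224 / 16 = 14
num_patches = patches_per_side ** 2           # 14 * 14 = 196
vision_tokens = num_patches + 1               # plus class token = 197

size_bytes = PARAMS * BYTES_PER_PARAM         # 600,000,000 bytes
size_mib = size_bytes / 2**20                 # bytes converted to MiB

print(patches_per_side, num_patches, vision_tokens)
print(f"{size_bytes / 1e6:.0f} MB = {size_mib:.0f} MiB")
```

Roughly 572 MiB is close to the listed 571 MB. This suggests, as an inference rather than something the card states, that the size refers to float32 weights measured in mebibytes.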

Applicable Scenarios

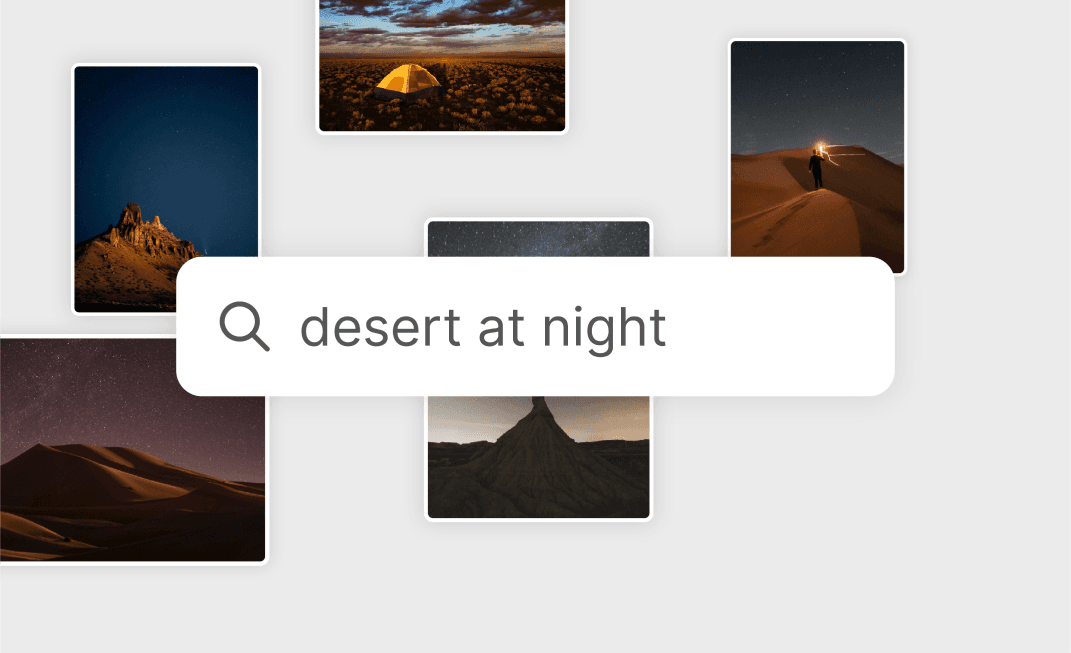

- Image Search

- Content Moderation

- Caption Creation

License

Model: MIT

Tags

- foundation

Supported Compute Devices

- Snapdragon X Elite CRD

- Snapdragon X Plus 8-Core CRD

Supported Compute Chipsets

- Snapdragon® X Elite

- Snapdragon® X Plus 8-Core
