DeepLabV3-Plus-MobileNet-Quantized

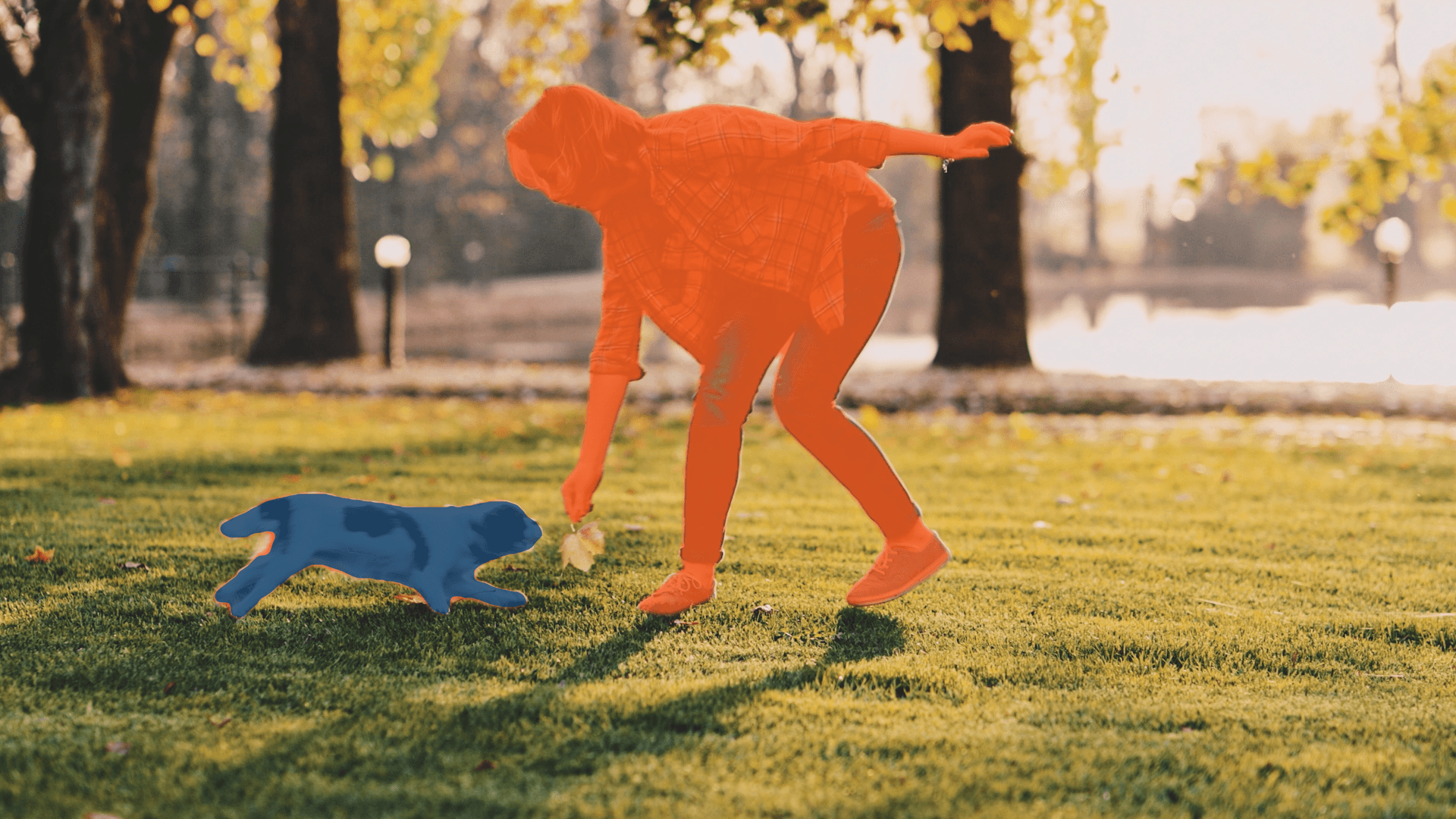

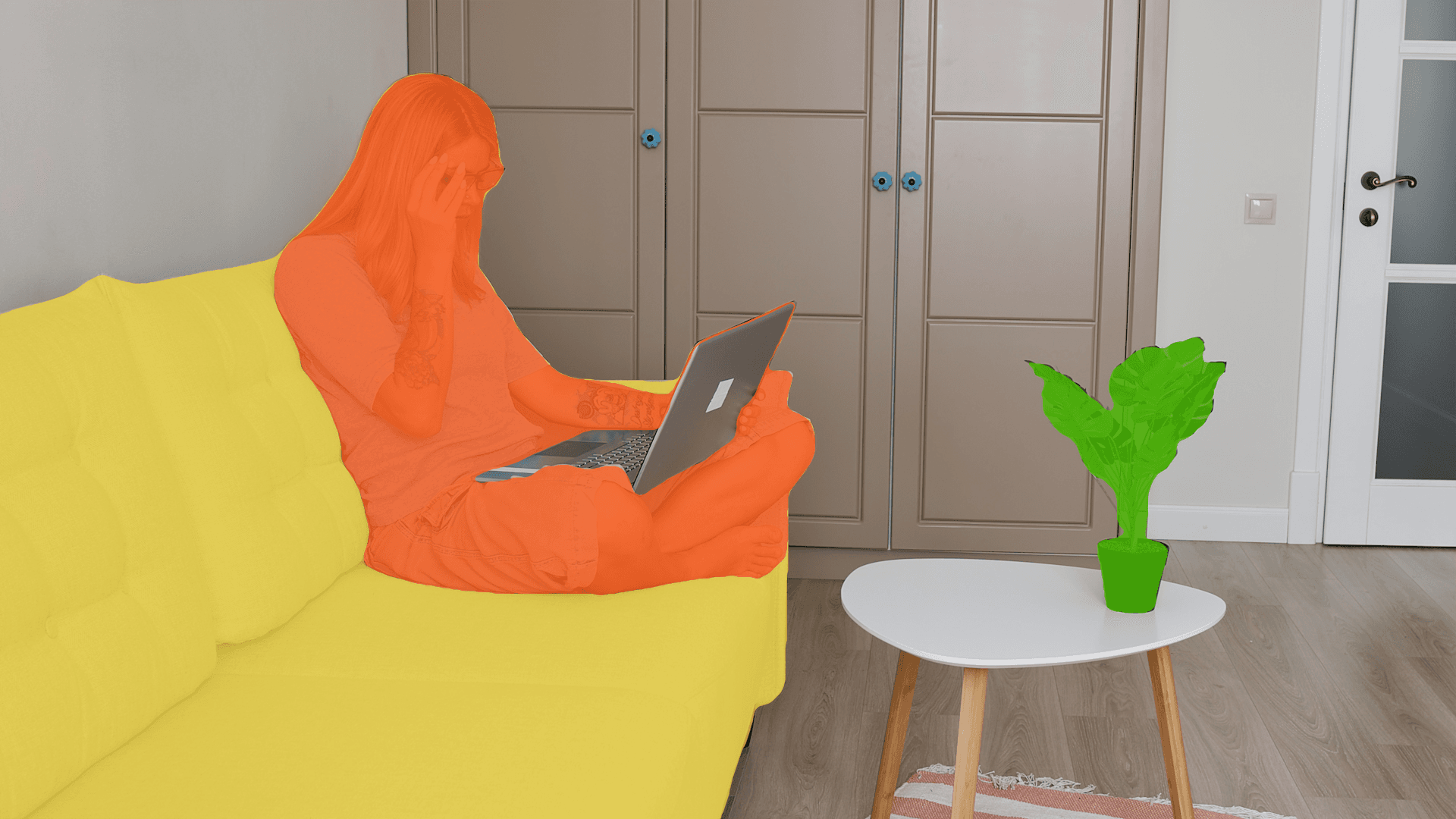

Quantized Deep Convolutional Neural Network model for semantic segmentation.

DeepLabV3 Quantized performs semantic segmentation at multiple scales and has been trained on various datasets. It uses MobileNet as its backbone.

Technical Details

Model checkpoint: VOC2012

Input resolution: 513x513

Number of parameters: 5.80M

Model size: 6.04 MB

Number of output classes: 21
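
To make the figures above concrete, here is a minimal sketch of how the 21 per-class score maps could be turned into a segmentation mask. It assumes the model output is available as a NumPy array of shape (21, H, W) with classes on the first axis; that layout is an assumption, not something this page states, and the helper names `logits_to_mask` and `classes_present` are hypothetical. The label list is the standard Pascal VOC 2012 set that matches the VOC2012 checkpoint.

```python
import numpy as np

# Pascal VOC 2012 segmentation labels: 20 object classes plus background (index 0).
VOC_CLASSES = [
    "background", "aeroplane", "bicycle", "bird", "boat", "bottle", "bus",
    "car", "cat", "chair", "cow", "diningtable", "dog", "horse", "motorbike",
    "person", "pottedplant", "sheep", "sofa", "train", "tvmonitor",
]

INPUT_SIZE = 513  # input resolution listed in the technical details


def logits_to_mask(logits: np.ndarray) -> np.ndarray:
    """Pick the highest-scoring class per pixel.

    Assumes `logits` has shape (21, H, W); the real output layout may differ.
    """
    if logits.ndim != 3 or logits.shape[0] != len(VOC_CLASSES):
        raise ValueError(f"expected shape (21, H, W), got {logits.shape}")
    return np.argmax(logits, axis=0).astype(np.uint8)


def classes_present(mask: np.ndarray) -> list:
    """Return the names of all classes that appear in the mask, in index order."""
    return [VOC_CLASSES[int(i)] for i in np.unique(mask)]


if __name__ == "__main__":
    # Synthetic scores stand in for real model output in this sketch.
    scores = np.zeros((len(VOC_CLASSES), INPUT_SIZE, INPUT_SIZE), dtype=np.float32)
    scores[15, 100:200, 100:200] = 5.0  # boost "person" in one square region
    mask = logits_to_mask(scores)
    print(mask.shape, classes_present(mask))
```

The argmax step stays the same for any spatial size; only the class axis would need adjusting if the deployed model emits a different layout or already returns class indices.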

Applicable Scenarios

- Anomaly Detection

- Inventory Management

Supported Form Factors

- Phone

- Tablet

- IoT

Licenses

Source Model: MIT

Deployable Model: AI-HUB-MODELS-LICENSE

Tags

- quantized

Supported Devices

- QCS6490 (Proxy)

- QCS8250 (Proxy)

- QCS8275 (Proxy)

- QCS8550 (Proxy)

- QCS9075 (Proxy)

- RB3 Gen 2 (Proxy)

- RB5 (Proxy)

- SA7255P ADP

- SA8255 (Proxy)

- SA8295P ADP

- SA8650 (Proxy)

- SA8775P ADP

- Samsung Galaxy S21

- Samsung Galaxy S21 Ultra

- Samsung Galaxy S21+

- Samsung Galaxy S22 5G

- Samsung Galaxy S22 Ultra 5G

- Samsung Galaxy S22+ 5G

- Samsung Galaxy S23

- Samsung Galaxy S23 Ultra

- Samsung Galaxy S23+

- Samsung Galaxy S24

- Samsung Galaxy S24 Ultra

- Samsung Galaxy S24+

- Samsung Galaxy Tab S8

- Snapdragon 8 Elite QRD

- Snapdragon X Elite CRD

- Snapdragon X Plus 8-Core CRD

- Xiaomi 12

- Xiaomi 12 Pro

Supported Chipsets

- Qualcomm® QCS6490 (Proxy)

- Qualcomm® QCS8250 (Proxy)

- Qualcomm® QCS8275 (Proxy)

- Qualcomm® QCS8550 (Proxy)

- Qualcomm® QCS9075 (Proxy)

- Qualcomm® SA7255P

- Qualcomm® SA8255P (Proxy)

- Qualcomm® SA8295P

- Qualcomm® SA8650P (Proxy)

- Qualcomm® SA8775P

- Snapdragon® 8 Elite Mobile

- Snapdragon® 8 Gen 1 Mobile

- Snapdragon® 8 Gen 2 Mobile

- Snapdragon® 8 Gen 3 Mobile

- Snapdragon® 888 Mobile

- Snapdragon® X Elite

- Snapdragon® X Plus 8-Core
