Mobile

Enabling intelligent connections and personalized applications across devices

Sample Ready Apps

Super Resolution

Sample application to deploy an optimized super resolution solution on device

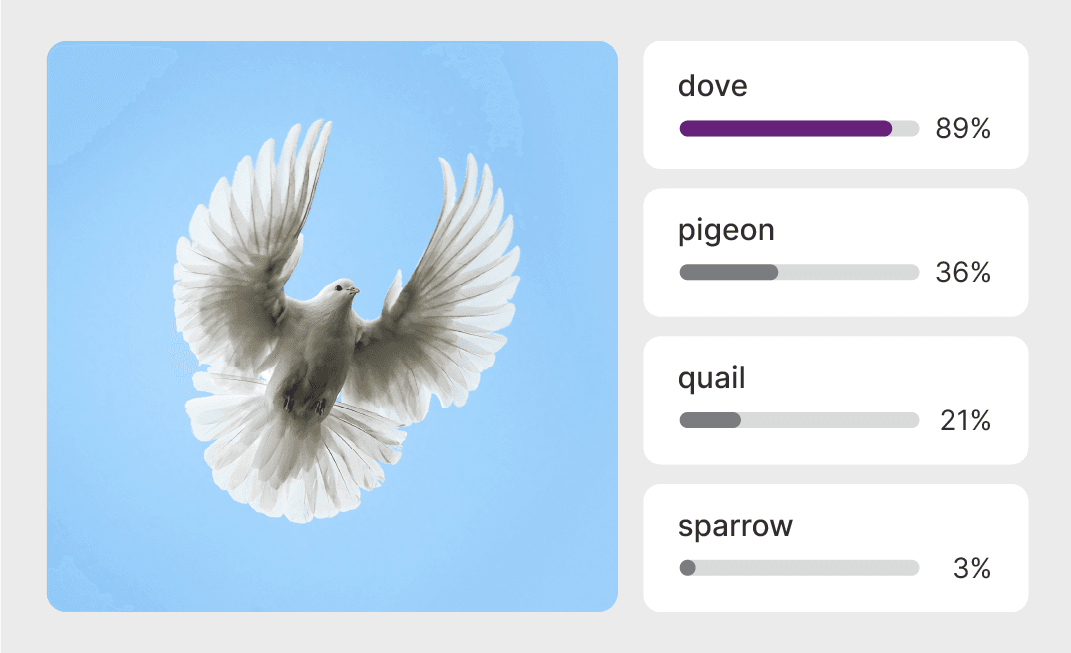

Image Classification

Sample application to deploy an optimized image classification solution on device

Semantic Segmentation

Sample application to deploy an optimized semantic segmentation solution on device

Filter by

Domain/Use Case

Chipset

Device

Model Precision

Runtime

Tags

- A “backbone” model is designed to extract task-agnostic representations from specific data modalities (e.g., images, text, speech). This representation can then be fine-tuned for specialized tasks.

- A “foundation” model is versatile and designed for multi-task capabilities, without the need for fine-tuning.

- Models capable of generating text, images, or other data using generative models, often in response to prompts.

- Large language models. Useful for a variety of tasks including language generation, optical character recognition, information retrieval, and more.

- A “real-time” model can typically achieve 5-60 predictions per second. This translates to latency ranging up to 200 ms per prediction.

291 model variants (158 models)